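Quick start

LangSmith is a platform for building production-grade LLM applications. It lets you closely monitor and evaluate your application's performance and cost, and optimize both.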

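Compatibility notes

Install dependencies

Prerequisites

Make sure your environment meets the following requirements:

- Python 3.7 or later

- An existing Lighthouse LLM application

- The application's secret key and public key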

```shell
pip install -U langsmith openai
```
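Before you continue, you can optionally confirm that the interpreter meets the version requirement above and that both packages can be imported. The following minimal sketch uses only the standard library. The helper names `python_ok` and `missing_packages` are hypothetical and are not part of LangSmith or Lighthouse.

```python
import importlib.util
import sys

# Minimum Python version taken from the prerequisites above.
MIN_PYTHON = (3, 7)


def python_ok() -> bool:
    """Return True if the running interpreter meets MIN_PYTHON."""
    return sys.version_info[:2] >= MIN_PYTHON


def missing_packages(packages=("langsmith", "openai")):
    """Return the names in `packages` that cannot be found for import."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("Missing packages:", missing_packages() or "none")
```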

Create an API key

To create an API key, go to the Lighthouse "LLM 应用/应用分析/新建 LLM 应用" (LLM Apps / App Analytics / New LLM App) page and click "新建 API Key" (Create API Key).

Set up your environment

```shell
export LANGSMITH_TRACING=true
export LANGSMITH_ENDPOINT="<your-lighthouse-endpoint>"
export LANGSMITH_API_KEY="<your-lighthouse-api-key>"
export LANGSMITH_PROJECT="<your-lighthouse-project>"
export OPENAI_API_URL="<your-openai-api>"
export OPENAI_API_KEY="<your-openai-api-key>"
```

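Configuration tip

We recommend configuring these settings through environment variables. This keeps sensitive values such as API keys out of your source code.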
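Unset variables and unreplaced placeholder values can silently break tracing, so you may want to check the variables before you run the examples. The sketch below uses a hypothetical helper, `missing_env_vars`, which is not a LangSmith API. It treats empty values and leftover `<...>` placeholders as missing. Note that by default the OpenAI Python SDK reads its base URL from `OPENAI_BASE_URL`, not from `OPENAI_API_URL`. That is why the tracing examples below pass the endpoint to `OpenAI(base_url=...)` explicitly.

```python
import os

# The variables exported in the step above. OPENAI_API_URL is this guide's own
# name; the OpenAI SDK does not read it automatically.
REQUIRED_VARS = (
    "LANGSMITH_TRACING",
    "LANGSMITH_ENDPOINT",
    "LANGSMITH_API_KEY",
    "LANGSMITH_PROJECT",
    "OPENAI_API_URL",
    "OPENAI_API_KEY",
)


def missing_env_vars(env=None):
    """Return required variables that are unset, empty, or still a <...> placeholder."""
    env = os.environ if env is None else env
    missing = []
    for name in REQUIRED_VARS:
        value = env.get(name, "").strip()
        # Treat unreplaced "<...>" placeholders as missing too.
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        # Print only the variable names, never their (secret) values.
        print("Missing or placeholder values:", ", ".join(missing))
    else:
        print("All required variables are set.")
```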

Define your application

The following is a simple RAG application. You can replace it with your own code:

```python
import os

from openai import OpenAI

# Client for the OpenAI-compatible endpoint configured in the environment variables above
openai_client = OpenAI(base_url=os.environ["OPENAI_API_URL"], api_key=os.environ["OPENAI_API_KEY"])

# This is the retriever we will use in RAG.
# It is mocked out here, but it could be any retrieval logic you want.
def retriever(query: str):
    results = ["Harrison worked at Kensho"]
    return results

# This is the end-to-end RAG chain:
# it runs a retrieval step, then calls the chat completions API.
def rag(question):
    docs = retriever(question)
    system_message = """Answer the user's question using only the information provided below:

{docs}""".format(docs="\n".join(docs))
    return openai_client.chat.completions.create(
        messages=[
            {"role": "system", "content": system_message},
            {"role": "user", "content": question},
        ],
        model="gpt-4o-mini",  # use a model name that your endpoint serves
    )
```
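The `retriever` above is mocked and always returns the same document. As a hedged illustration of what a real one might look like, here is a drop-in replacement that ranks a small in-memory document list by word overlap with the query. Word overlap is a deliberately simple stand-in for the embedding-based or search-based retrieval that a production RAG system would normally use. `DOCUMENTS` and its contents are hypothetical sample data.

```python
import re
from typing import List, Set

# Hypothetical in-memory document store; replace with your own data source.
DOCUMENTS = [
    "Harrison worked at Kensho",
    "The weather today is sunny",
    "LangSmith records traces of LLM application runs",
]


def _tokens(text: str) -> Set[str]:
    """Lowercased word tokens, used for a simple overlap score."""
    return set(re.findall(r"\w+", text.lower()))


def retriever(query: str, k: int = 2) -> List[str]:
    """Return up to k documents that share the most words with the query."""
    query_tokens = _tokens(query)
    scored = [(len(query_tokens & _tokens(doc)), doc) for doc in DOCUMENTS]
    # Keep documents with at least one shared word, best matches first
    # (the sort is stable, so ties keep their original order).
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]
```

Because the signature still accepts a single `query` argument, `rag(question)` can call this version unchanged.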

Trace OpenAI calls

```python
import os

from langsmith.wrappers import wrap_openai
from openai import OpenAI

# Wrap the client so that each call made through it is recorded as a LangSmith trace
openai_client = wrap_openai(
    OpenAI(base_url=os.environ["OPENAI_API_URL"], api_key=os.environ["OPENAI_API_KEY"])
)

# This is the retriever we will use in RAG.
# It is mocked out here, but it could be any retrieval logic you want.
def retriever(query: str):
    results = ["Harrison worked at Kensho"]
    return results

# This is the end-to-end RAG chain:
# it runs a retrieval step, then calls the model through the wrapped client.
def rag(question):
    docs = retriever(question)
    system_message = """Answer the user's question using only the information provided below:

{docs}""".format(docs="\n".join(docs))
    return openai_client.chat.completions.create(
        messages=[
            {"role": "system", "content": system_message},
            {"role": "user", "content": question},
        ],
        model="glm-4-flash",
    )
```

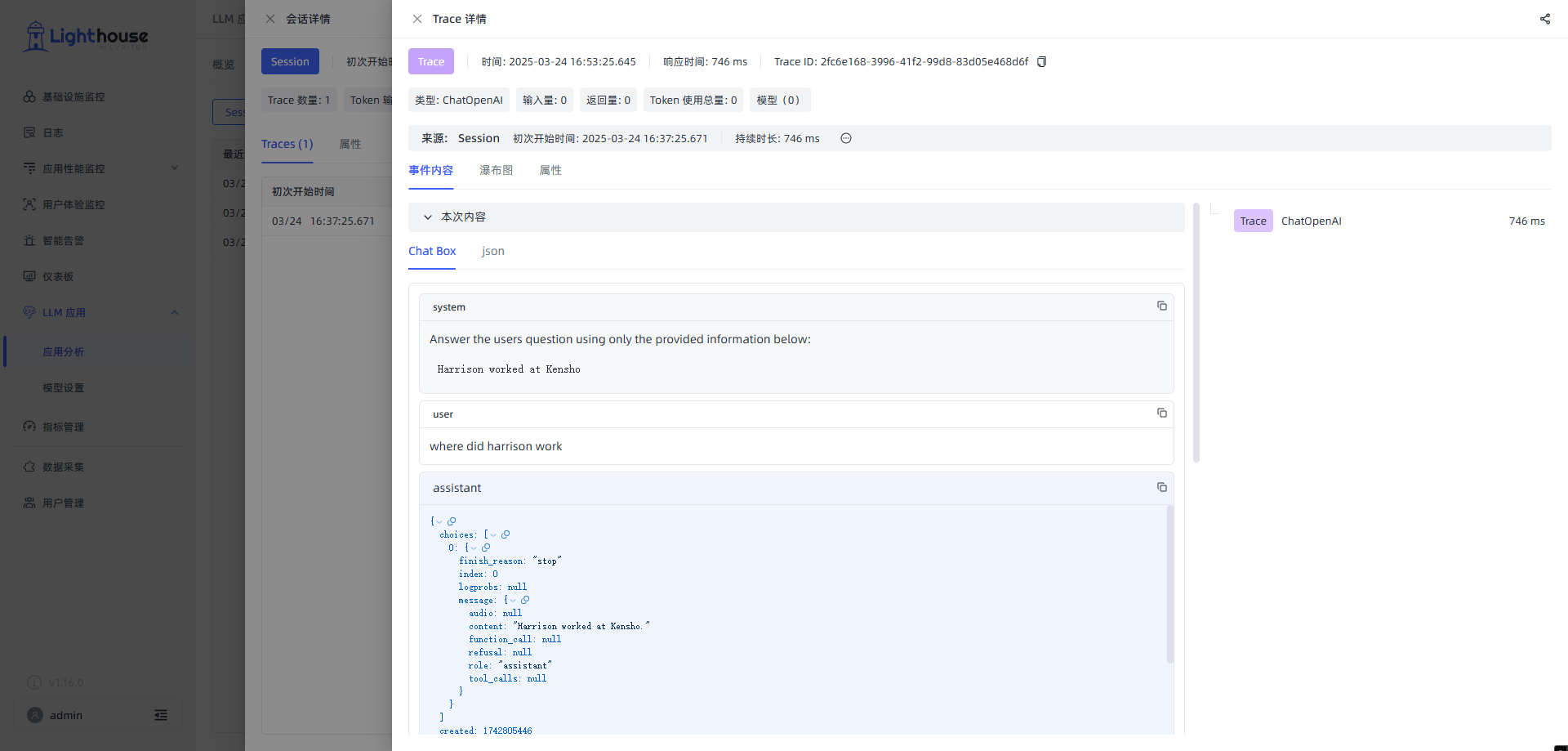

现在,当您按如下方式调用应用程序时:

rag("where did harrison work")

将在 Lighthouse 新建的项目中生成 OpenAI 调用的跟踪。它应该看起来像这样:

Trace the entire application

```python
import os

from langsmith import traceable
from langsmith.wrappers import wrap_openai
from openai import OpenAI

# Use the same OPENAI_API_URL variable exported in the environment setup step
openai_client = wrap_openai(
    OpenAI(base_url=os.environ["OPENAI_API_URL"], api_key=os.environ["OPENAI_API_KEY"])
)

# This is the retriever we will use in RAG.
# It is mocked out here, but it could be any retrieval logic you want.
def retriever(query: str):
    results = ["Harrison worked at Kensho"]
    return results

# This is the end-to-end RAG chain:
# it runs a retrieval step, then calls the model through the wrapped client.
# @traceable records the whole function as one run, so the OpenAI call made
# inside it appears as a child run.
@traceable
def rag(question):
    docs = retriever(question)
    system_message = """Answer the user's question using only the information provided below:

{docs}""".format(docs="\n".join(docs))
    return openai_client.chat.completions.create(
        messages=[
            {"role": "system", "content": system_message},
            {"role": "user", "content": question},
        ],
        model="glm-4-flash",
    )
```

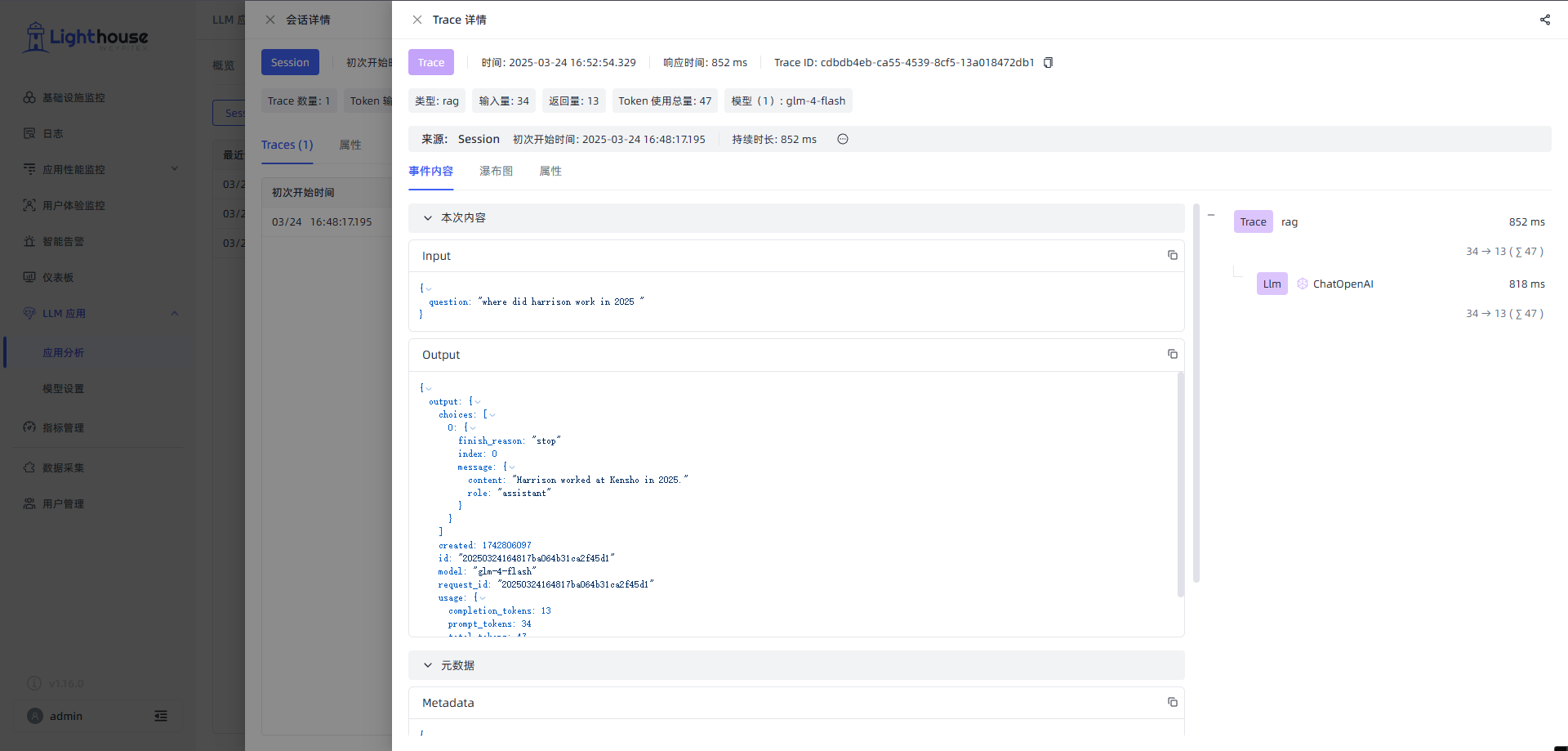

result = rag("where did harrison work in 2025 ")

print(result.choices[0].message.content)

这将生成整个管道的跟踪(将 OpenAI 调用作为子运行)- 它应该看起来像这样

Framework support

Supported frameworks

Through the LangSmith SDK, Lighthouse supports the following mainstream frameworks:

- OpenAI

- LangChain

- LangGraph