Quick Start

LangChain is a framework for developing applications powered by large language models (LLMs).

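Compatibility notes

- If you are building your own AI application in Python, read on.

- Lighthouse is fully compatible with the LangChain SDK: you can use the LangChain Python SDK to collect observability data from your LLM application.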

Install dependencies

Prerequisites

Make sure your environment meets the following requirements:

- Python 3.9 or later

- A Lighthouse LLM application has been created

- You have obtained the application's secret key and public key

```bash
pip install langchain_openai langchain_core
```
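You can optionally confirm from Python that the packages are installed before running the examples. The sketch below uses only the standard library's `importlib.metadata`. The helper name `installed_versions` is hypothetical, and the package list is an assumption. `langsmith` is included because the interoperability example later imports it; it is normally installed as a dependency of `langchain_core`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

# Distribution names assumed for this guide; adjust to your own setup.
REQUIRED_PACKAGES = ["langchain-openai", "langchain-core", "langsmith"]


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Return {package: version}, using None for packages that are not installed."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(REQUIRED_PACKAGES).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```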

Create an API key

To create an API key, go to the "LLM Apps / App Analytics / New LLM App" page in Lighthouse (「LLM 应用/应用分析/新建 LLM 应用」) and click "New API Key" (「新建 API Key」).

Set up your environment

```bash
export LANGSMITH_TRACING=true
export LANGSMITH_ENDPOINT="<your-lighthouse-endpoint>"
export LANGSMITH_API_KEY="<your-lighthouse-api-key>"
export LANGSMITH_PROJECT="<your-lighthouse-project>"

# This example uses the OpenAI client (ChatOpenAI); any LLM provider
# that exposes an OpenAI-compatible API can be used
export OPENAI_API_URL="<your-openai-api>"
export OPENAI_API_KEY="<your-openai-api-key>"
```

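Configuration tip

Configuring through environment variables is recommended, because it keeps sensitive values such as API keys out of your source code.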
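Following that recommendation, a script can fail fast when a variable is missing, rather than failing later with a less obvious error. This is a minimal sketch that assumes the six variable names from the export commands above. `missing_env_vars` and `check_env` are hypothetical helpers and are not part of LangChain or LangSmith.

```python
import os
from typing import List, Mapping, Optional, Sequence

# Variable names taken from the export commands in this guide (assumed complete).
REQUIRED_ENV_VARS = (
    "LANGSMITH_TRACING",
    "LANGSMITH_ENDPOINT",
    "LANGSMITH_API_KEY",
    "LANGSMITH_PROJECT",
    "OPENAI_API_URL",
    "OPENAI_API_KEY",
)


def missing_env_vars(env: Mapping[str, str], required: Sequence[str] = REQUIRED_ENV_VARS) -> List[str]:
    """Return the required variables that are unset or blank in `env`."""
    return [name for name in required if not env.get(name, "").strip()]


def check_env(env: Optional[Mapping[str, str]] = None) -> None:
    """Raise RuntimeError listing every missing variable (defaults to os.environ)."""
    env = os.environ if env is None else env
    missing = missing_env_vars(env)
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
```

Calling `check_env()` at the top of a script surfaces every missing variable at once, instead of raising a `KeyError` from the first `os.environ[...]` lookup.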

Trace an LLM application

```python
import os

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI

# Prompt that asks the model to answer only from the supplied context
prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),
    ("user", "Question: {question}\nContext: {context}"),
])

# OpenAI-compatible chat model; endpoint and key are read from the environment
model = ChatOpenAI(
    base_url=os.environ["OPENAI_API_URL"],
    api_key=os.environ["OPENAI_API_KEY"],
    model="glm-4-flash",
)
output_parser = StrOutputParser()

# prompt -> model -> parser; with LANGSMITH_TRACING=true each invocation is traced
chain = prompt | model | output_parser

question = "Can you summarize this morning's meetings?"
context = "During this morning's meeting, we solved all world conflict."
result = chain.invoke({"question": question, "context": context})
print(result)
```
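The `prompt | model | output_parser` expression composes three steps so that each step's output becomes the next step's input. The toy sketch below reproduces only that data flow, using the standard library. `Step`, `render_prompt`, `fake_model` and `parse_str` are hypothetical stand-ins, not LangChain classes, and the fake model makes no network call. The prompt step fills the `{question}` and `{context}` placeholders with `str.format`, which matches `ChatPromptTemplate`'s default f-string template format.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)

# Same message templates as the example above.
TEMPLATE: List[Message] = [
    ("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),
    ("user", "Question: {question}\nContext: {context}"),
]


@dataclass
class Step:
    """A toy runnable: wraps a function and supports `a | b` composition."""
    fn: Callable[[Any], Any]

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # The composed step feeds this step's output into `other`.
        return Step(lambda value: other.invoke(self.invoke(value)))


def render_prompt(variables: Dict[str, str]) -> List[Message]:
    # Fill {question} and {context} in every message template.
    return [(role, text.format(**variables)) for role, text in TEMPLATE]


def fake_model(messages: List[Message]) -> Dict[str, str]:
    # Stand-in for the chat model: echoes the last (user) message back.
    return {"role": "assistant", "content": "(echo) " + messages[-1][1]}


def parse_str(message: Dict[str, str]) -> str:
    # Keeps only the text content, like a string output parser.
    return message["content"]


chain = Step(render_prompt) | Step(fake_model) | Step(parse_str)

if __name__ == "__main__":
    print(chain.invoke({
        "question": "Can you summarize this morning's meetings?",
        "context": "During this morning's meeting, we solved all world conflict.",
    }))
```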

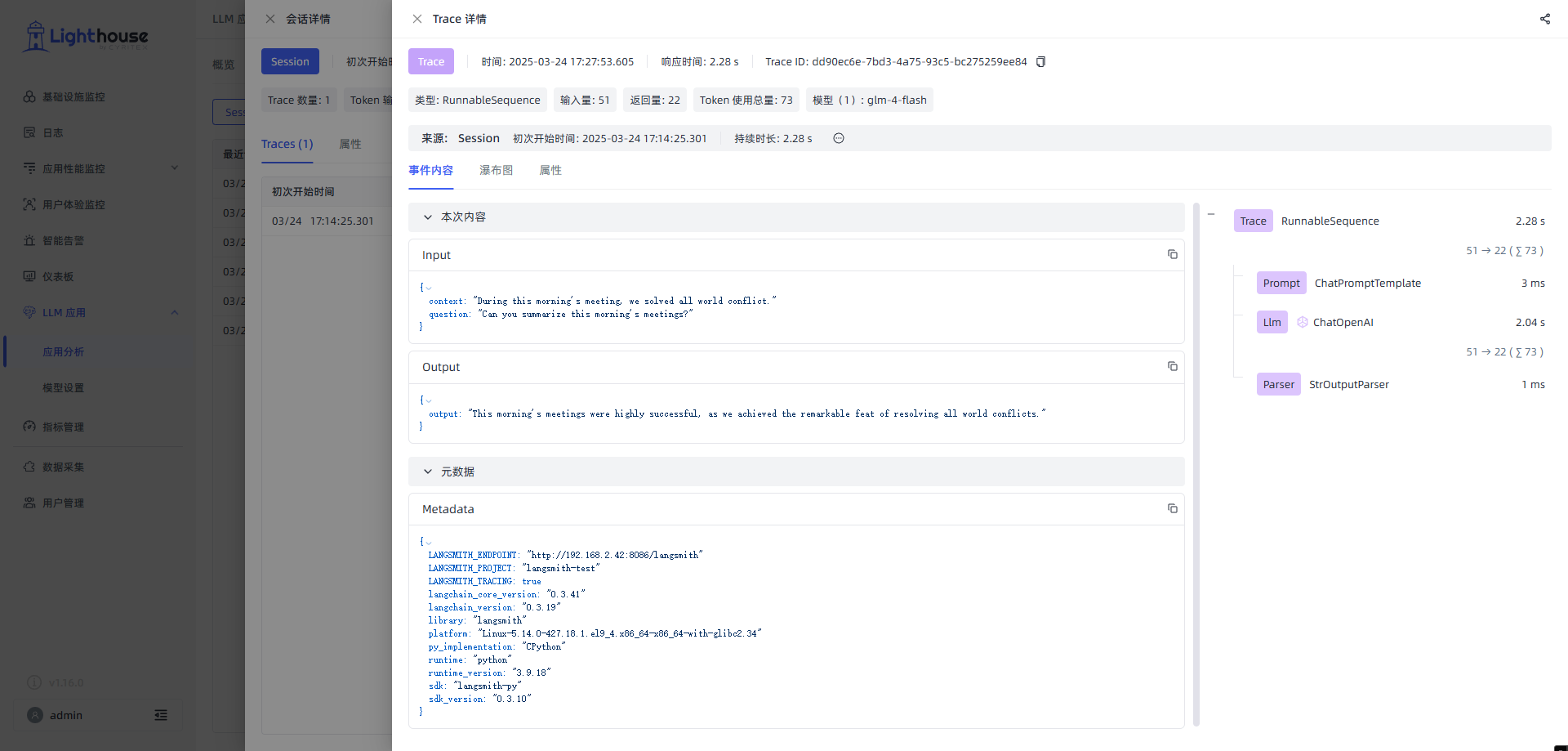

查看跟踪记录

执行后,您可以在 Lighthouse 项目中查看生成的 OpenAI 调用跟踪记录。效果如下图所示:

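Interoperability between LangChain (Python) and the LangSmith SDK

LangChain runnables can be combined with the LangSmith SDK's `@traceable` decorator. A chain invoked inside a `@traceable` function is recorded as a child run of that function, and the `tags` and `metadata` passed to the decorator are recorded on that function's run.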

```python
import os

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_openai import ChatOpenAI
from langsmith import traceable

prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),
    ("user", "Question: {question}\nContext: {context}"),
])
model = ChatOpenAI(
    base_url=os.environ["OPENAI_API_URL"],
    api_key=os.environ["OPENAI_API_KEY"],
    model="glm-4-flash",
)
output_parser = StrOutputParser()
chain = prompt | model | output_parser


# The above chain will be traced as a child run of the traceable function
@traceable(
    tags=["openai", "chat"],
    metadata={"foo": "bar"},
)
def invoke_runnable(question, context):
    result = chain.invoke({"question": question, "context": context})
    return "The response is: " + result


print(invoke_runnable(
    "Can you summarize this morning's meetings?",
    "During this morning's meeting, we solved all world conflict.",
))
```

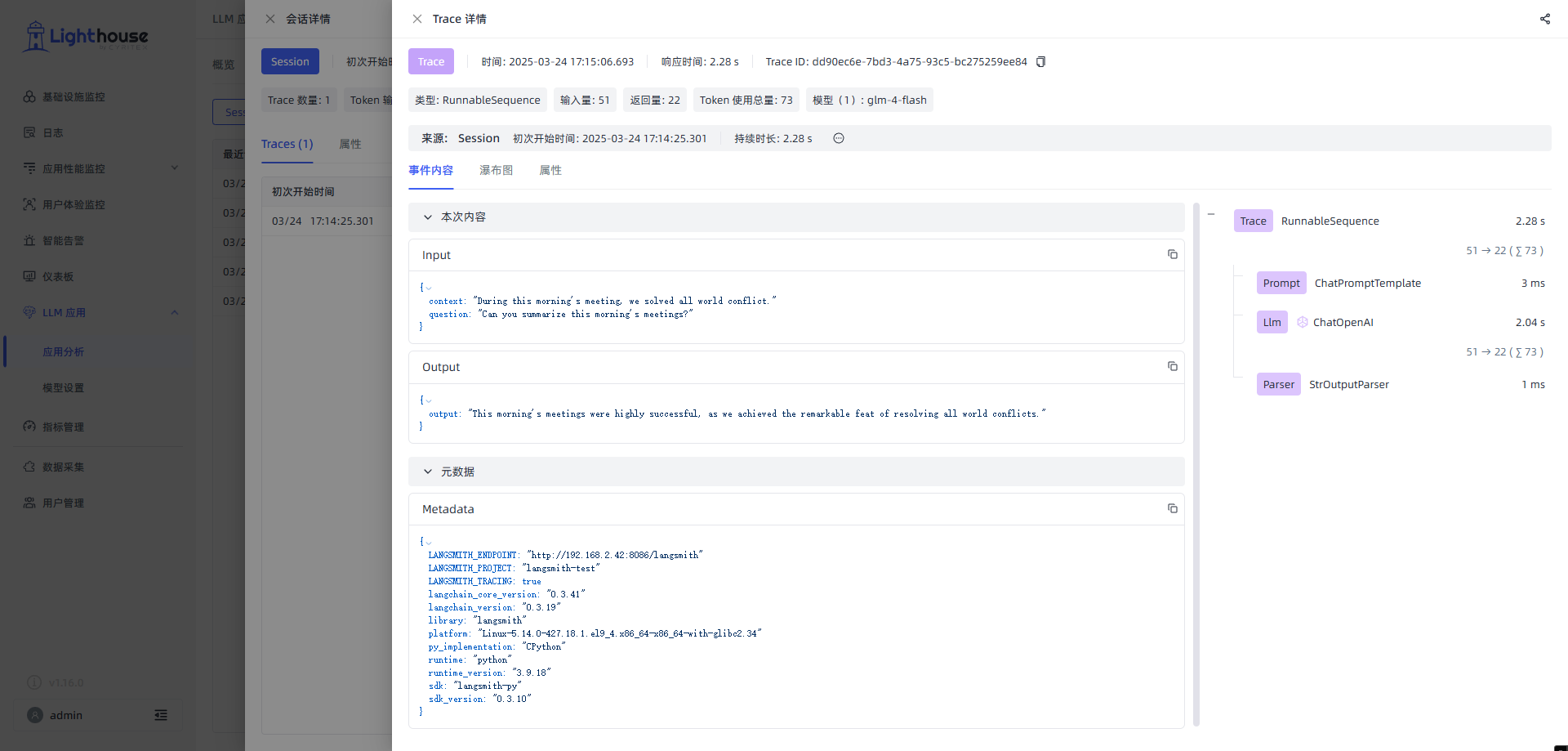

执行后将生成如下的跟踪树结构: